By Mukul Patnaik

November 1st, 2022

Updated on: February 6th, 2023

Since the invention of computers, humans have had a vision of ‘intelligent machines’. In 1950, Alan Turing, the renowned English mathematician and computer scientist, began a paper with the now-famous provocation “Can machines think?”. Futurists have long dreamed about how our world could be drastically changed when A.I. enters the mainstream. They picture a science-fiction-esque reality where our lives are optimized by personal A.I. assistants that we talk to (like ‘JARVIS’ from Iron Man), where people barely drive because a fleet of self-driving cars can be summoned in an instant, where all tedious, laborious work (growing food, cleaning, construction…) is done by robots, and where even specialized disciplines (doctor, lawyer, engineer) have been automated because A.I. is more efficient and doesn’t get sick or take vacations. In short, a post-scarcity world. For decades, AI has gone through a number of boom-and-bust cycles, and these dreams never seemed even remotely possible. That may have just changed over the past few months.

In 2011, Marc Andreessen famously wrote ‘Software is eating the world’, referring to the internet revolution. He was right: over the last decade software ate the world, generating trillions of dollars of GDP and productivity. I believe it is now A.I.’s turn to do so, and on an exponential scale. We are currently witnessing a boom in machine learning that could establish the building blocks of the futurist vision above.

This enthusiasm stems from recent advances in deep neural networks: specifically large language models (LLMs), which are aimed at helping computers ‘understand’ and even produce human-readable text, and, more recently, diffusion models, which enable computers to generate images and art from text. Neural networks are nothing new; in fact, they are ubiquitous, from Netflix anticipating what will entertain us, to predicting which TikTok video to show you next, to forecasting the ETA of your food delivery, and even self-driving cars. These applications fall under what has been called “Analytical AI”, and they have worked well for niche use cases. But this time something feels different: large language models and generative AI may truly have the potential to move science forward for public benefit, and to help us solve the hardest problems of our time, from climate change and poverty to healthcare and sustainable energy.

‘A Cambrian explosion’

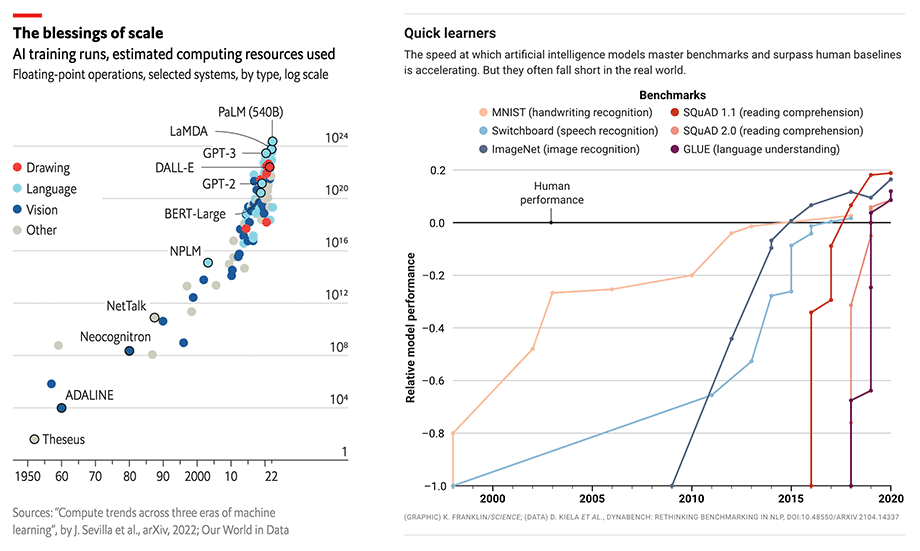

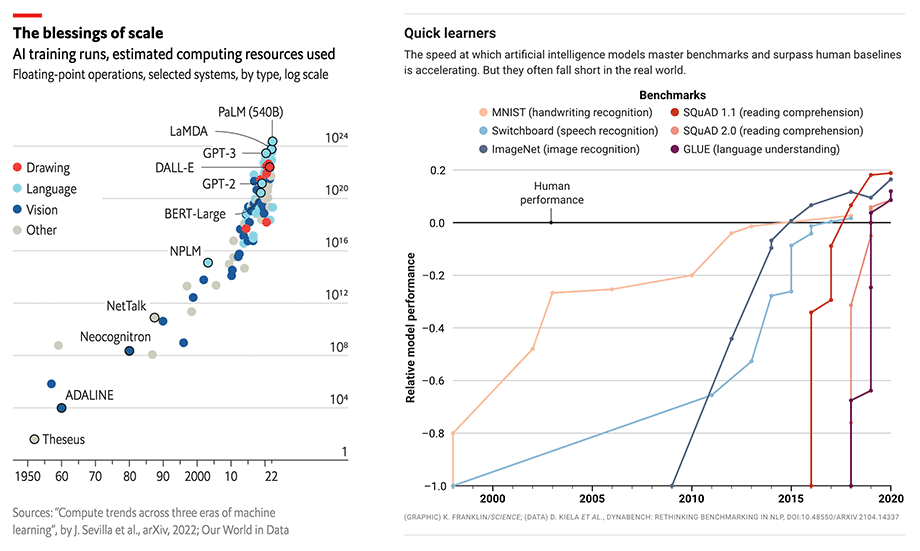

The pivotal moment that revolutionized the industry and set all these events in motion was a seminal 2017 paper titled “Attention Is All You Need”, which introduced the Transformer, a new neural network architecture that produces top-quality language models while taking much less time to train and being far more parallelizable. It produced state-of-the-art results on benchmarks and inaugurated a new era in NLP (natural language processing). In 2019, Google announced that BERT, a Transformer-based model, was powering its search engine. These models are easy to adapt to specific domains, and the largest of them are few-shot learners. As models with more and more parameters were built in the years that followed, a "Moore's Law" for language models emerged, and sure enough, as the models grew larger, they began to produce results on par with, and on many benchmarks eventually surpassing, those of humans.

'The rapid evolution in the parameters of LLMs.'

Researchers have remarked that the growth in model capabilities as models scale “feels like a law of physics or thermodynamics” in its stability and predictability.

In July 2020, OpenAI, a California-based A.I. research giant, unveiled GPT-3, with a whopping 175 billion parameters, trained on 570 gigabytes of text scraped from the Internet. GPT-3 blew away all expectations and was a new landmark in sophisticated LLMs. Developers and early users were astonished at its remarkable and unusually broad set of capabilities, such as writing essays that can pass for human work, creating charts and websites from text descriptions, summarizing text, and generating computer code from plain language, all with little to no supervision. Recently, GPT-3 even wrote an academic paper about itself. Future users are likely to discover even more capabilities.

It is difficult to grasp the pace at which these advancements have been occurring. Here are some breakthroughs just in the past few months:

Researchers at OpenAI created a fine-tuned version of GPT-3 called Codex, training it on more than 150 gigabytes of code from the code-sharing platform GitHub. GitHub has now integrated Codex into a service called Copilot that suggests code as people type. OpenAI also released DALL-E 2 (the name is an amalgam of the painter Salvador Dalí and the movie WALL-E), a diffusion model that generates digital sketches and images from simple text prompts. Google announced that its language model PaLM can correctly answer multistep reasoning and math problems.

AlphaFold, DeepMind’s protein folding program, solved one of the biggest problems in biology by presenting something truly spectacular: a snapshot of the predicted structure of nearly every protein known to science. In just the past week, AI researchers from Meta Platforms Inc. and Google have taken an even bigger leap forward, developing systems that can generate short videos from just about any text prompt one can imagine!

‘A Renaissance in Digital Art’

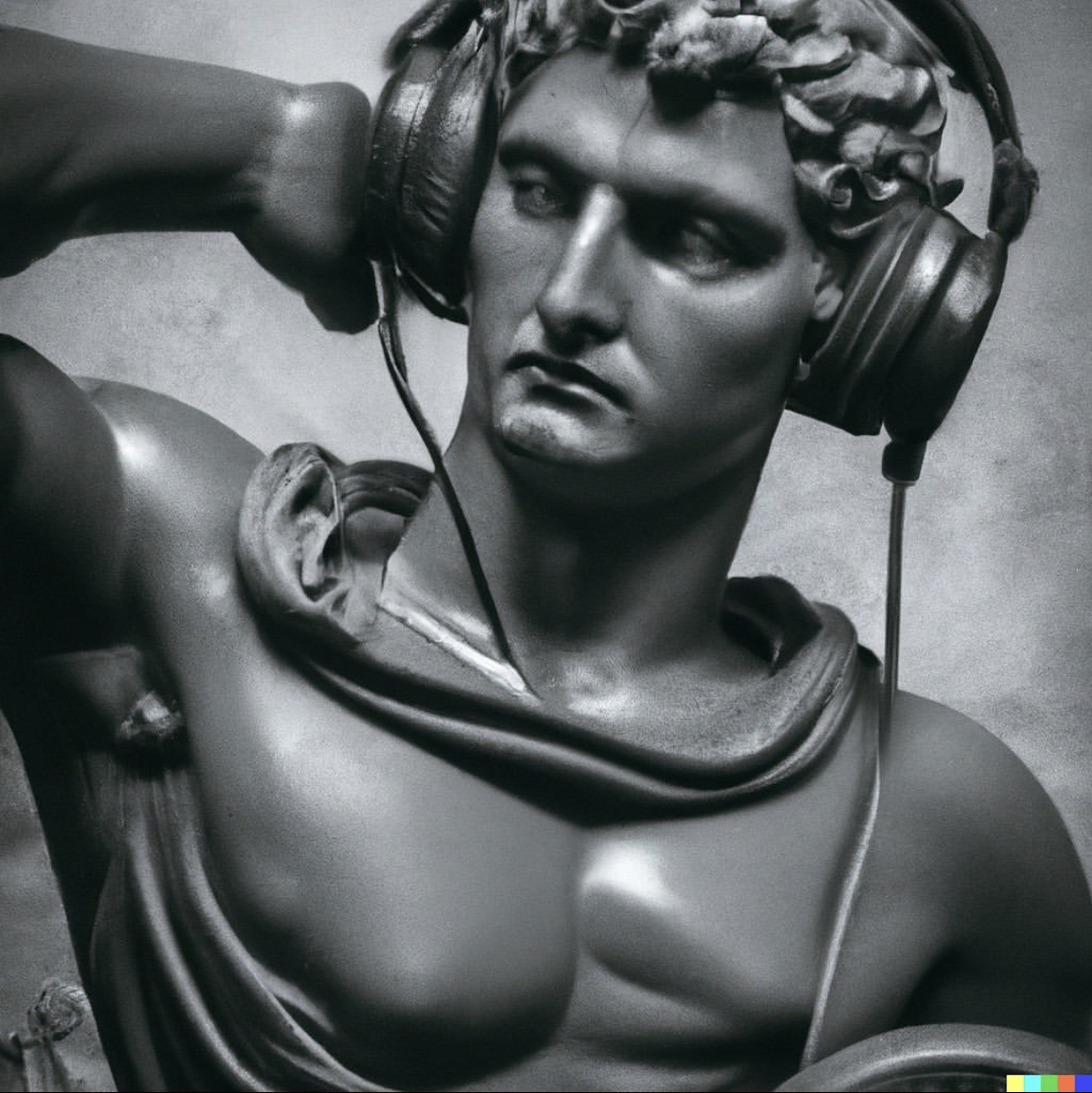

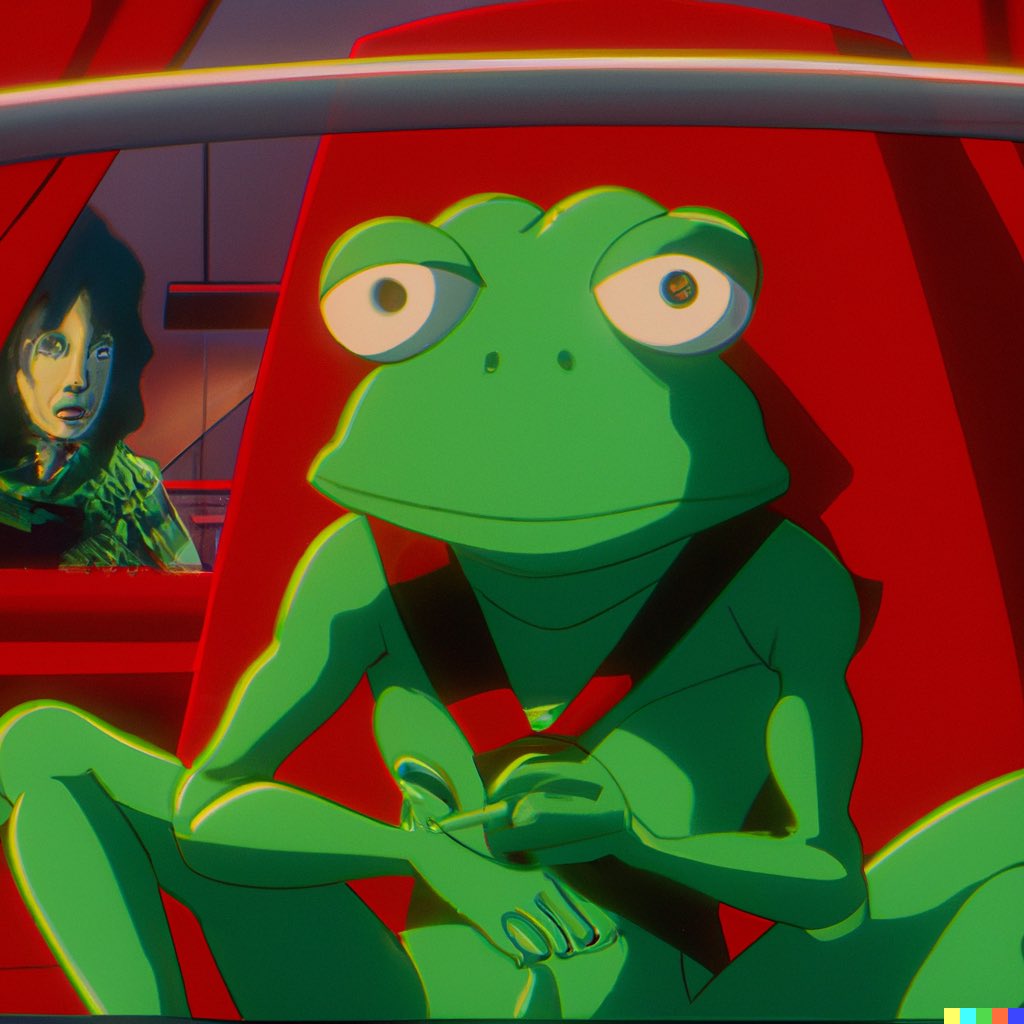

Until recently, machines were only capable of analysis and repetitive cognitive tasks, giving them no chance to compete with humans in creative work. However, technology is now beginning to excel at producing sensible and appealing artifacts. The world has been enthralled by generated art, and the works produced are genuinely remarkable. They have challenged the common assumption that A.I. can never be 'creative' in the same sense that humans are. DALL-E 2 creates stunningly detailed digital sketches and images solely from a simple text prompt. It was opened to the public in September 2022, after being introduced in beta earlier in the year. The images are outstanding in both quality and variety. It's like having access to a professional group of illustrators.

Another exciting model, this one open source, is Stable Diffusion, which does photorealistic images really well and was released to the public in August. Its code is available on GitHub and can be run on your own laptop. That has inspired many developers to tweak and fine-tune the model for their own purposes, or build on top of it, which has unleashed many great experiments such as DreamBooth and Midjourney.

AI's artistic ability has reached the point where it’s challenging for people to tell whether a human or an AI created a painting, photo, magazine article or musical composition. In one survey, respondents did no better than chance at guessing whether a piece of music was composed by Bach or by an AI. Do you think you would do better?

Given that most customers aren't really interested in who created a particular advertisement or logo, this is almost certainly going to have a significant impact on content creation, including in the commercial realm. An ad or a logo either works or it doesn't, and those circumstances favor the machine. Among many other innovations, AI will give the world high-quality automatic personal assistants and driverless vehicles.

A Paradigm Shift

New methodologies, including diffusion models, have become easier to train and use for inference as the cost of computing decreases. The scientific community keeps creating bigger models and better algorithms. The floodgates for research and application development have now opened as developer access has widened from private beta to open beta and even open source. The application layer is primed for an explosion of creativity as the platform layer solidifies, models continue to get better, faster, and cheaper, and model access moves toward free and open source. These huge models will inspire a new wave of killer applications, much as mobile unleashed new types of applications through features like GPS, cameras, and on-the-go networking.

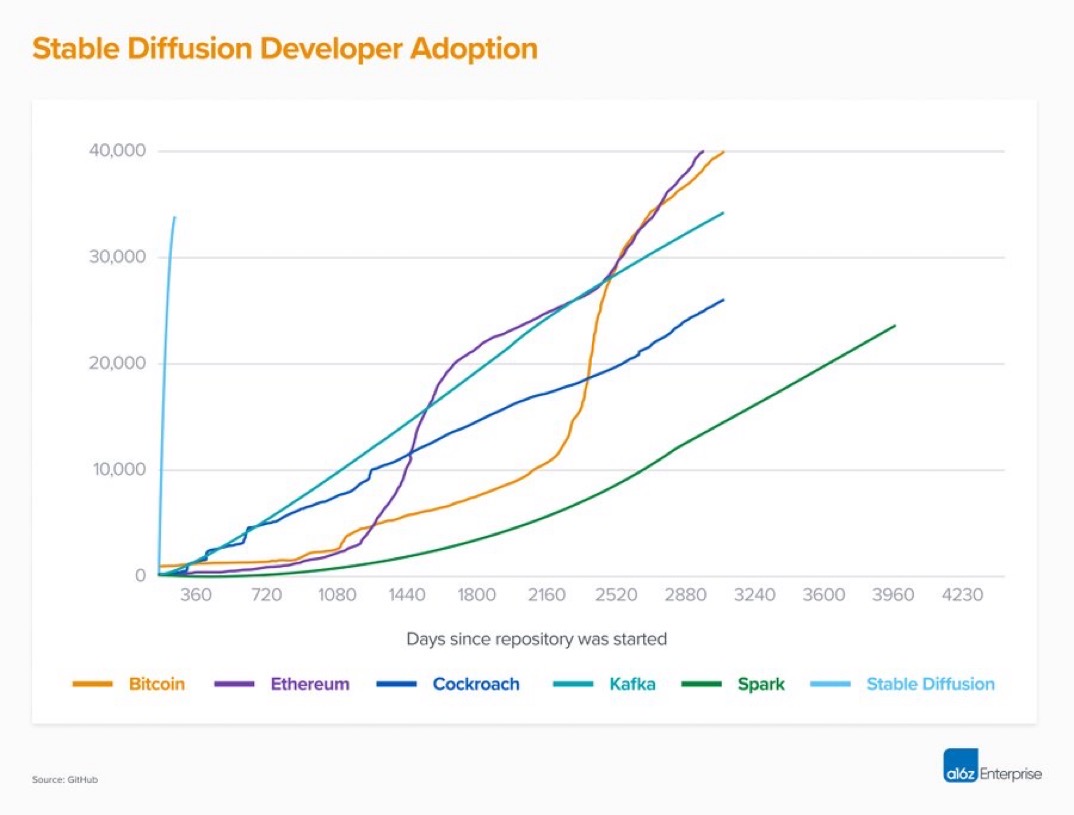

By GitHub stars, Stable Diffusion is the fastest growing open source project in history, outpacing even Bitcoin and Ethereum. A recent a16z comparison of developer adoption, measured in GitHub stars, across various open-source infrastructure technologies showed that Stable Diffusion accumulated 33,600 stars within 90 days, an unprecedented feat.

'Stable Diffusion developer adoption'

This new category is called “Generative AI,” meaning the machine is generating something new rather than analyzing something that already exists, and it is well on the way to producing work much better than what humans create by hand. Certain functions may be completely replaced by generative AI, while others are more likely to thrive from a tight iterative creative cycle between human and machine, but generative AI should unlock better, faster and cheaper creation across a wide range of fields, generating vast productivity gains, economic value, and commensurate market cap.

The fields that generative AI addresses, knowledge work and creative work, comprise billions of workers. Generative AI can make these workers at least 10% more efficient and/or creative; therefore, it has the potential to generate trillions of dollars of economic value. The dream is that generative AI brings the marginal cost of creation and knowledge work down towards zero. Every industry that requires humans to create original work is up for reinvention, from social media to gaming, advertising to architecture, coding to graphic design, product design to law, marketing to sales, and copywriting to poetry.

An 'aha' moment

On November 30th, 2022, OpenAI released ChatGPT, a prototype AI chatbot that has amazed many users with its human-like, detailed answers to inquiries, such as drafting a contract between an artist and a producer or writing detailed code. It could revolutionize the way people use search engines by not just providing links for users to sift through, but by solving elaborate problems and answering intricate questions. It can solve complex coding tasks, and early studies found it performing at or near a passing level on some professional exams in law and medicine. It can also write poetry, create music, and even write a novel. It truly feels like a generalist when it comes to text-based knowledge work, and it is only the beginning.

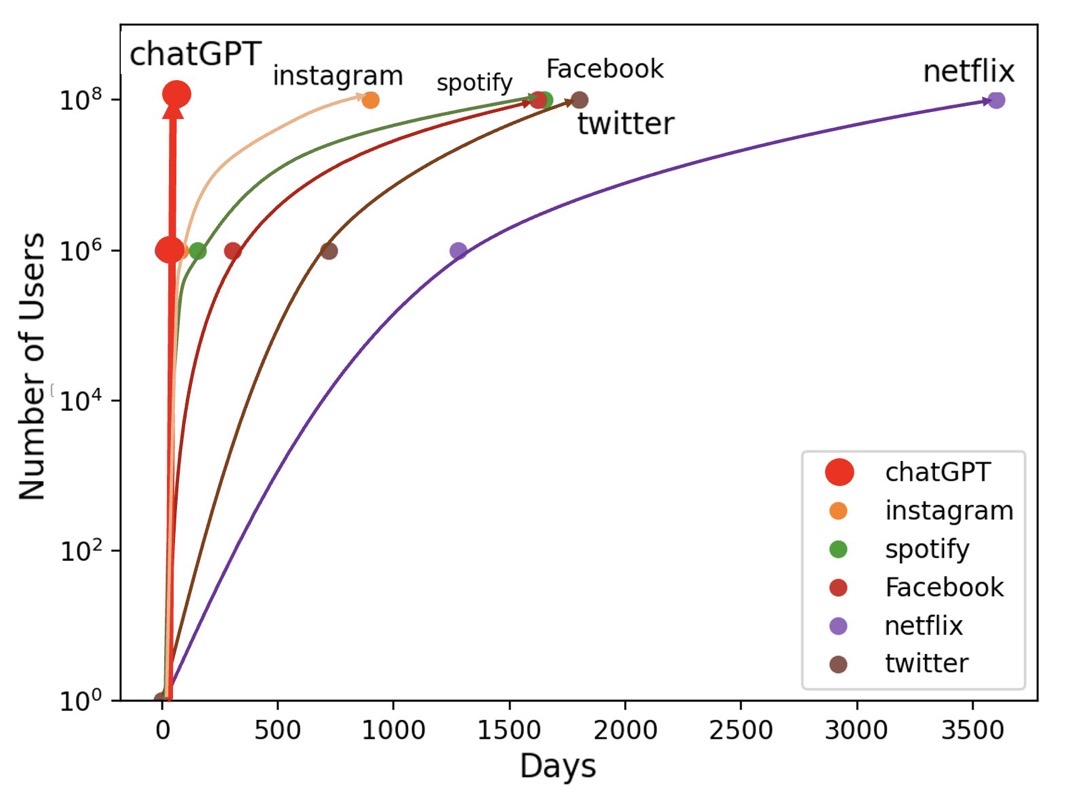

'ChatGPT is the fastest consumer app to reach 100 million users. Source: @kylef_'

At the time of writing this update, ChatGPT has reached 100 million users just two months after launching. It had about 590 million visits in January from 100 million unique visitors, according to analysis by data firm Similarweb. Analysts at investment bank UBS said the rate of growth was unprecedented for a consumer app. “In 20 years following the internet space, we cannot recall a faster ramp in a consumer internet app,” they wrote in a note, as reported by Reuters.

In January, Microsoft announced a new multiyear investment in OpenAI, reportedly worth $10 billion. Microsoft has also announced a new version of its search engine Bing, powered by an upgraded version of the same AI technology that underpins ChatGPT. The company is launching the product alongside new AI-enhanced features for its Edge browser, promising that the two will provide a new experience for browsing the web and finding information online.

I believe we are on the verge of a completely new way of interacting with technology, and it is going to be a game changer.

Many investors see a potentially transformative platform shift, comparable to the smartphone or the early days of the internet, and are moving to capitalize on it. These kinds of shifts greatly expand the total addressable market of people who might be able to use the technology, moving from a few dedicated nerds to business professionals, and eventually everyone else. Venture investors, with their billions, are hellbent on riding the latest wave of AI hype. Jasper, a writing assistant, announced a $125 million fundraise led by Insight Partners at a $1.5 billion valuation. Cohere, an AI foundation model company that competes with Microsoft-backed OpenAI, is in talks to raise hundreds of millions of dollars in a funding round that could value the startup at more than $6 billion. Alphabet Inc.’s Google has invested almost $400 million in artificial intelligence startup Anthropic, which is testing a rival to ChatGPT named 'Claude'. DeepMind plans to come out with its own chatbot, "Sparrow", and Quora has launched its own chatbot called "Poe". There is even an open source effort to create a similarly powerful large language model.

AGI: The Endgame

We as humans have evolved to be general problem-solvers. We can learn new things, and our learning in one area doesn’t require a fundamental rewriting of our code. Our knowledge in one area isn’t so brittle that it degrades when we acquire knowledge in a new area, or at least this is not a general problem that erodes our understanding again and again. The current generation of AI is limited to a specific task or set of tasks, such as video recommendation or playing chess, and a system built for one can't do the other. Artificial General Intelligence (AGI) would be able to learn any task that a human can, and we’re seeing signs of movement in that direction. With AGI, machines would be able to learn and think like humans, making them far more efficient and effective at completing tasks. This could potentially lead to a future where machines handle most of the world's work, leaving humans free to pursue other interests. While this may sound like a utopia, AGI would also bring a number of challenges, such as ensuring that machines act in our best interests. Nonetheless, AGI has the potential to radically change the world as we know it and usher in a new era of human-machine cooperation.

So where does this all lead us? Just over 50 years ago, we sent humans to the moon using computers that were many orders of magnitude less powerful than the iPhone in your pocket today. As technology develops exponentially and begins to self-improve, it will eventually produce ever more advanced AI. What is conceivable seems to have no boundaries. Picture going back to the time of the Apollo missions and showing people today's technology. They would think it is magic. Now imagine, for example, that we invent a computer that is no smarter than the typical team of researchers at Stanford or MIT today, but that, because of how it operates in the digital space, is a million times faster and more efficient than the minds that created it. If you left it running for just a week, it would perform 20,000 years' worth of human intellectual labor. If just 50 years accomplished so much, what could 20,000 years accomplish, compressed into a single week?
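As a quick back-of-the-envelope check of the 20,000-year figure, assuming, as the thought experiment does, a speedup of exactly one million and a year of 365.25 days:

$$
\underbrace{7\ \text{days}}_{1\ \text{week}} \times 10^{6} = 7 \times 10^{6}\ \text{days}, \qquad \frac{7 \times 10^{6}\ \text{days}}{365.25\ \text{days/year}} \approx 19{,}165\ \text{years} \approx 20{,}000\ \text{years}
$$

The round number holds, provided each hour of the machine's work is worth a million hours of the research team's work, which is exactly what the thought experiment assumes.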

‘The Singularity’ is a hypothesized event that would occur when technology becomes so advanced that it can create A.I.s that are able to improve upon themselves, producing an exponential increase in intelligence. This would drive such rapid technological progress that humans would eventually no longer be able to comprehend it. The term is borrowed from physics, where a singularity, such as the center of a black hole in general relativity, is a point at which the known laws of physics break down and no longer apply. It is this similar unpredictability, the limit of human comprehension of exponential technology, that makes it a fitting name.